1 Introduction

Simply put, Research Software Engineering is the art of making our work deployable and functional on other machines.

1.1 Why Work Like a Software Engineer?

Why should a researcher or business analyst work Like a Software Engineer? First, because everybody and their grandmothers seem to work like software engineers. Statistical computing continues to be on the rise in many branches of research. Figure 1.1 shows the obvious trend in the sum of total R package downloads per month since 2015.

Code

library(cranlogs)

library(dplyr)

library(lubridate)

library(tsbox)

top <- cranlogs::cran_top_downloads()

packs <- cranlogs::cran_downloads(

from = "2015-01-01",

to = "2022-09-30")

packs |>

group_by(floor_date(date, "month")) |>

summarize(m = sum(count)) |>

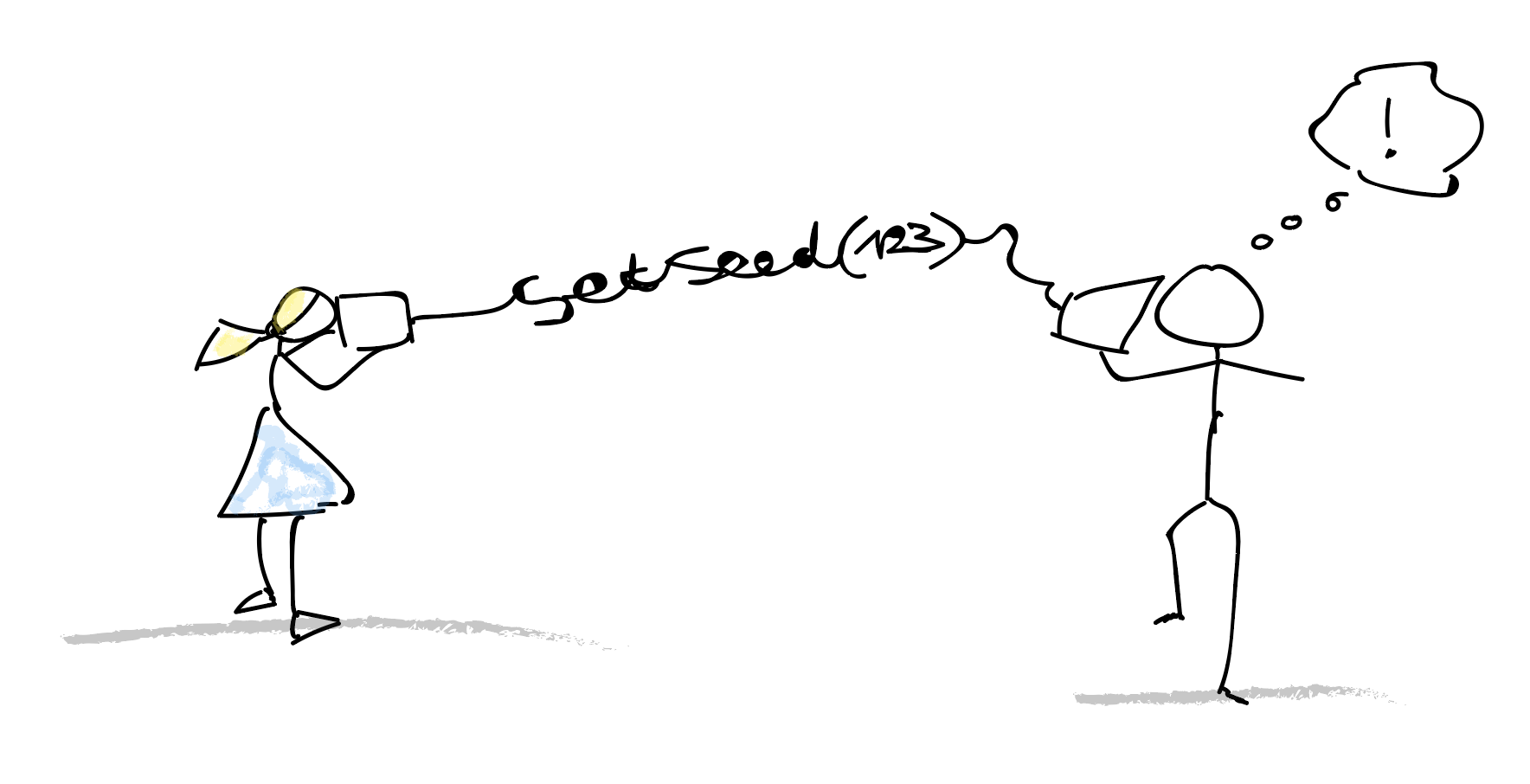

ts_plot()Bandwagonism aside, source code can be a tremendously sharp, unambiguous and international communication channel. Your web scraper does not work? Instead of reaching out in a clumsy but wordy cry for help, posting what you tried so far described by source code will often get you good answers within hours. Platforms like stackoverflow1 or Crossvalidated2 do not only store millions of questions and answers, they also gather a huge and active community to discuss issues. Plus, recent developments show that source code is not only useful to communicate with human experts. Have you tried to turn an idea into code or improve a piece of code by playing ping pong with chatGPT or Bing? Or think of feature requests: After a little code ping pong with the package author, your wish eventually becomes clearer. Let alone chats with colleagues and co-authors. Sharing code just works. Academic journals have found that out, too. Many outlets require you to make the data and source code behind your work available. Social Science Data Editors (Vilhuber et al. 2022) is a bleeding-edge project at the time of writing this, but it is already referred to by top-notch journals like American Economic Review (AER).

In addition to the above reproducibility and ability to share, code scales and automates. automation is very convenient; like when you want to download data, process and create the same visualization and put it on your website any given Sunday. automation is inevitable; like when you have to gather daily updates from different outlets or work through thousands of .pdfs.

Last but not least, programming enables you to do many things you couldn’t do without being an absolute guru (if at all) if it wasn’t for programming. Take visualization, for example. Go check the D3 Examples at https://d3js.org/. Now, try to do that in Excel. If you did these things in Excel, it would make you an absolute spreadsheet visualization Jedi, probably missing out on other time-consuming skills to master. Yet, with decent, carpentry-level programming skills you can already do so many spectacular things while not really specializing and staying very flexible.

1.2 Why Work Like an Operations Engineer?

While software development has become closer to many researchers, operations, the second part of the term DevOps, is much further away from the average researcher or business analyst. Why even think about operations? Because we can afford to do so. Operations have become so much more accessible in recent years, that many applications can be dealt with single-handedly. Though one’s production applications may still be administered by operations professionals, the ability to create and run a proof of concept from scratch is an invaluable skill. A running example says more than a 15-page specification that fails to translate business talk into tech talk. The other way around, something to look at an early stage helps to acquire funds and convince managers and other collaborators.

But people without a computer engineering background are by no means limited to proof of concepts these days. Trends like cloud computing and software-as-a-service products help developers focus on their expertise and limit the amount of knowledge needed to host a service in secure and highly available fashion.

Also, automation is key to the DevOps approach and an important reason why DevOps thinking is also very well suited for academic researchers and business analysts. So-called continuous integration can help to enforce a battery of quality checks such as unit tests or installation checks. Let’s say a push to a certain branch of a git repository leads to the checks and tests. In a typical workflow, successful completion of quality checks triggers continuous deployment to a blog, rendering into a paper or interactive data visualization.

By embracing both parts of the DevOps approach, researchers do not only gain extra efficiency, but more importantly they improve reproducibility and therefore accountability and quality of their work. The effect of a DevOps approach on quality control is not limited to reproducible research in a publication sense only, but also enforces rules during collaboration: no matter who contributes, the contribution gets gut-checked and only deployed if checks passed. Similar to the well established term software carpentry that advocates a solid, application minded understanding of programming with data, I suggest a carpentry-level understanding of development and operations is desirable for the programming data analyst.

1.3 How To Read This Book?

The focal goal of this book is to map out the open source ecosystem, identify neuralgic components and give you an idea of how to improve not only in programming but also in navigating the wonderful but vast open source world. Chapter 2 is the road map for this book: it describes and classifies the different parts of the open source stack and explains how these pieces relate to each other. Subsequent chapters highlight core elements of the open source toolbox one at a time and walk through applied examples, mostly written in the R language.

This book is the companion I wish I had when I started an empirical, data intensive PhD in economics. Yet, the book is written years after my PhD was completed and with the hindsight of more than 10 years in academia. Research Software Engineering is written based on the experience of helping students and seasoned researchers of different fields with their data management, processing and communication of results.

If you are confident in your ambition to amp up your programming to at least solid software carpentry level3 within the next few months, I suggest getting an idea of your starting point relative to this book. The upcoming backlog section is essentially a list of suggested to-dos on your way to solid software carpentry. Obviously, you may have cleared a few tasks of this backlog before reading this book which is fine. On the other hand, you might not know about some of the things that are listed in the requirement section, which is fine, too. The backlog and requirements section just mean to give you some orientation.

If you do not feel committed, revisit the previous section, discuss the need for programming with peers from your domain and possibly talk to a seasoned R or Python programmer. Motivation and commitment are key to the endurance needed to develop programming into a skill that truly leverages your domain-specific expertise.

1.4 Backlog

If you can confidently say you can check all or most of the below, you have reached carpentry level in developing and running your applications. Even if this level is your starting point, you might find a few useful tips or simply may come to cherry-pick. In case you are rather unfamiliar with most of the below, the contextualization, the classification and overview of tools is likely the most valuable help this book provides.

A solid, applied understanding of git version control and the collaboration workflow associated with git does not only help you stay ahead of your own code; git proficiency makes you a team player. If you are not familiar with commits, pulls, pushes, branches, forks and pull requests, Research Software Engineering will open up a new world for you. An introduction to industry standard collaboration makes you fit into a plethora of (software) teams around the globe – in academia and beyond.

Your backlog en route to a researcher who is comfortable in Research Software Engineering obviously contains a strategy to improve your programming itself. To work with data in the long run, an idea of the challenges of data management from persistent storage to access restrictions should complement your programming. Plus, modern data-driven science often has to handle datasets so large, which is when infrastructure other than local desktops come into play. Yet, high performance computing (HPC) is by far not the only reason it is handy for a researcher to have a basic understanding of infrastructure. Communication of results, including data dissemination or interactive online reports require the content to be served from a server with permanent online access. Basic workflow automation of regular procedures, e.g., for a repeated extract-transform-load (ETL) process to update data, is a low-hanging (and very useful) fruit for a programming researcher.

The case studies at the end of the book are not exactly a backlog item like the above but still a recommended read. The case studies in this book are hands-on programming examples – mostly written in R – to showcase tasks from application programming interface (API) usage to geospatial visualization in reproducible fashion. Reading and actually running other developers’ code not only improve one’s own code, but helps to see what makes code inclusive and what hampers comprehensibility.

1.5 Requirements

Even though Research Software Engineering aims to be inclusive and open to a broad audience with different starting points, several prerequisites exist to get the most out of this book. I recommend you have made your first experience with an interpreted programming language like R or Python. Be aware though that experience from a stats or math course does not automatically make you a programmer, as these courses are rightfully focused on their own domain. Books like R for Data Science (Wickham and Grolemund 2017) or websites like the The Carpentries4 help to solidify your data science minded, applied programming. Advanced R (Wickham 2019), despite its daunting title, is an excellent read to get past the superficial kind of understanding of R that we might acquire from a first encounter in a stats course.

A certain familiarity with console/terminal basics will help the reader sail smoothly. At the end of the day, there are no real must-have requirements to benefit from this book. The ability to self-reflect on one’s starting point remains the most important requirement to leverage the book.

Research Software Engineering willingly accepts to be overwhelming at times. Given the variety of topics touched on in an effort to show the big picture, I encourage the reader to remain relaxed about a few blanks even when it comes to fundamentals. The open source community offers plenty of great resources to selectively upgrade skills. This book intends to show how to evaluate the need for help and how to find the right sources. If none of the above means something to you, though, I recommend making yourself comfortable with the basics of some of the above fields before you start to read this book.